Table Of Content

It means a lot to us and it helps others easily find this article. For more updates follow us on Medium or follow me on Github. For the most up-to-date list of available options and entity have a look at API Spec. If all of your entities are grouped under the same directory, you can also provide a relative path to entities option and TypeDORM will auto load all the entities from a given path. Once this is done we need aws-sdk which TypeDORM uses to communicate with the DynamoDB table. The JSON model from NoSQL Workbench and the resources part of the serverless configuration file can be located here.

Key Design Strategies

It is the evolution of the adjacency list to a more complex pattern. You do not store just relationships but also define the type of relationship and hierarchy of the data. It is useful when you want to store a graph structure, for example, for a modern social networking app.

Approaching NoSQL design

Note that this isn’t an excuse to avoid learning how DynamoDB works! You shouldn’t model DynamoDB like a relational database, and you should learn DynamoDB data modeling principles. To be able to add a different access pattern for the same data set, I've used a global secondary index. Global secondary indexes (or GSIs) allow you to choose different primary keys and therefore have different querying options for the same dataset.

NoSQL Workbench for Amazon DynamoDB

How Zalando migrated their shopping carts to Amazon DynamoDB from Apache Cassandra Amazon Web Services - AWS Blog

How Zalando migrated their shopping carts to Amazon DynamoDB from Apache Cassandra Amazon Web Services.

Posted: Wed, 11 Oct 2023 07:00:00 GMT [source]

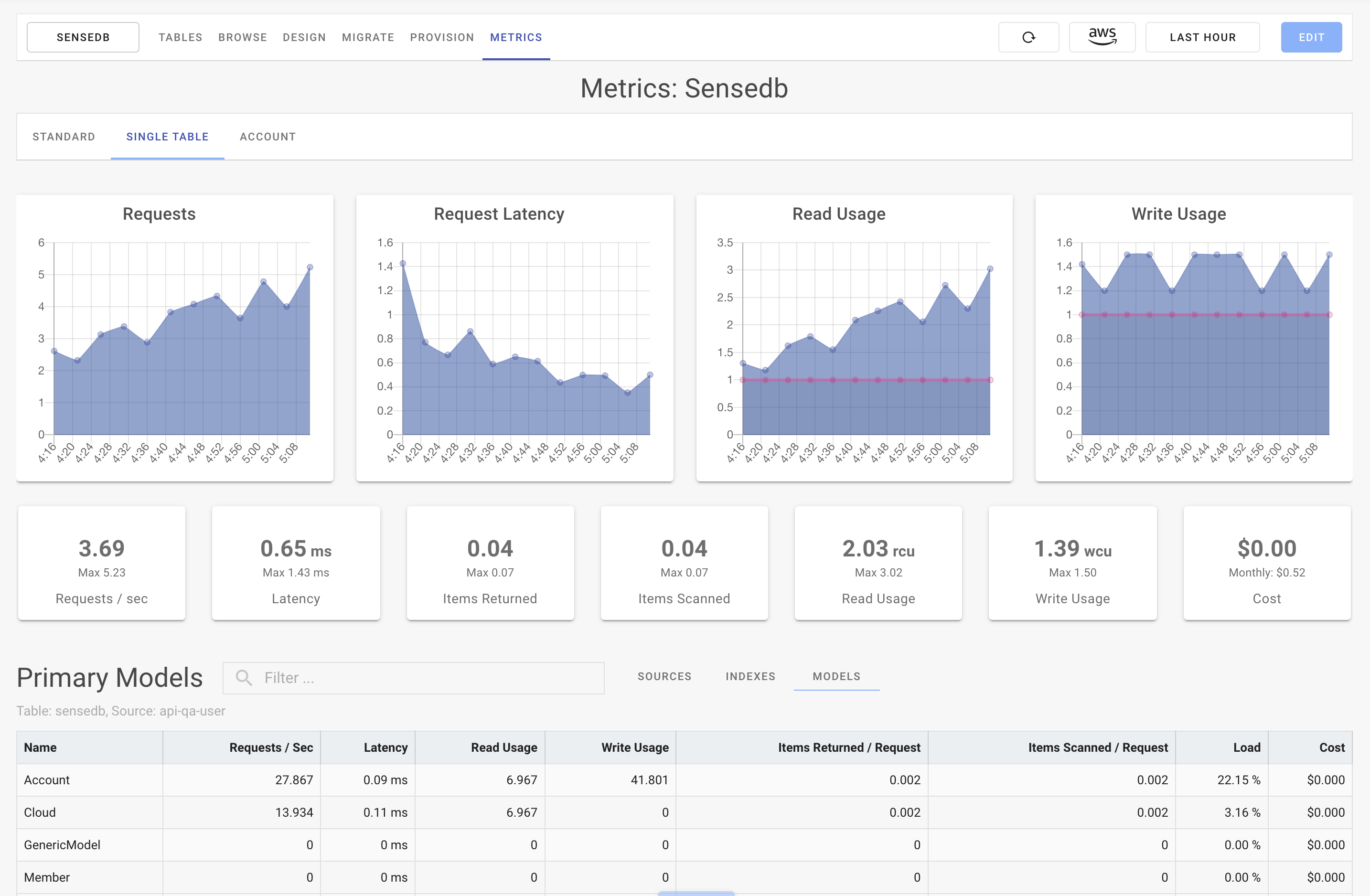

It is a combination of all the tricks described at the beginning of the relationship chapter. Then you play with sort keys and GSI to see the other part of the relationship and achieve other access patterns. DynamoDB is the beast that combined with the right design patterns unlocks the potential of unlimited scalability, performance, and low price. Its feature set is limited, but with the correct design patterns, mainly based on prevailed single table design, you can cover almost all use cases that are expected from an OLTP system. At SenseDeep, we’ve used DynamoDB, OneTable and the OneTable CLI extensively with our SenseDeep serverless developer studio.

You might wonder why the PK and SK for user metadata are the same, but you'll see why in the following table screenshot. Another key difference is that relational databases are traditionally designed to run on a single server, and scaling beyond a single server's capacity can be an involved process. DynamoDB scales by breaking data into 'shards' across different servers automatically, allowing it to scale beyond the bounds of a single server seamlessly. This automatic sharding means that your queries must always include the unique identifier (or partition key) for the item you're looking for, so DynamoDB can determine which shard your data is on. DynamoDB was built for large-scale, high-velocity applications that were outscaling the capabilities of relational databases.

KSUID is a 27 characters long string that combines a timestamp and an additional random key. An additional key is just to make sure the same key is deduplicated in some rare scenario. In the next section, we will have a look at the relational data set that we will be working with. You can always refer back to Forrest Brazeal’s article any time you feel lost.

This use of a B-tree on subsets of your data allows for highly efficient range queries of items with the same partition key. In this post, we’ll talk about single-table design in DynamoDB. We’ll start off with some relevant background on DynamoDB that will inform the data modeling discussion. Then, we’ll discuss when single-table design can be helpful in your application. Finally, we’ll conclude with some instances where using multiple tables in DynamoDB might be better for you.

More about TypeDORM

Under the hood, DynamoDB functions like a scalable, optimized hash table. Each record is placed into a “partition” based on its Partition Key and sorted within that partition based on its Sort Key. With only the Partition Key, we can query everything within that partition. However, by combining the power of the DynamoDB query API and our intelligent single table design, we can efficiently and consistently retrieve the precise data we need.

In this example, we are limiting changes of the document only to users that are editors. If we want to read all logs for a particular date, we need to execute 4 reads. We put a timestamp into the sort key so that we can limit the results to time range. Reads are slower, but the main point is to avoid hot partition.

The trick is to move the large portion of data into separate items and leave the only summary in the source table. That works well with most applications where you only want to see the data's extract, and when you click the item, you get all of the data. This pattern requires having a special item (only one) in the database with counting as its whole purpose. You execute the request to increment the number in the update expression. You must set the return value UPDATED_NEW to retrieve the new number. That is a random string that is long and subsequently unique enough that there is a guarantee it will not be repeated.

This is useful for limiting the amount of data returned at once to the client, since there can be many registrations. DynamoDB streams guarantee at least once delivery, which means it could receive the same event twice. You should make your processing idempotent, taking into account possible multiple triggering of the same event.

Now that we have defined all the required access patterns and how to meet each criterion, let’s build a summary table that should help us when we start to write some code. I am pretty familiar with relational databases and how to model our entities on them. But when we start to work with NoSQL databases we need to change the ways we model and represent our data. If we were to use a relational database, every entity from the above diagram would’ve had its own table, but since we’re usingDynamoDB we’re going to store all that data into a single table. As with most things in application development, there are lots of solutions to the same problem. While I'm sure there are cleverer ways to design for my access patterns, as a first stab at single-table design, this model fully meets my application's requirements!

By adopting this approach, you harness DynamoDB's inherent flexibility, allowing you to navigate through your data effortlessly. This design choice aligns with the philosophy of Single Table Design, emphasizing simplicity and ease of use in modeling complex relationships within DynamoDB. Iterate your design and continue to improve it before actually putting the application into use.

The I/O from my application to DynamoDB is the slowest part of my application’s processing, and I’m doing it twice by issuing two, separate, sequential queries, as shown in the preceding Figure 3. One thing I always tell people working with DynamoDB is “Do the math.” If you have a rough estimate of how much traffic you’ll have, you can do the math to figure out what it will cost you. Or, if you are deciding between two approaches to model the data, you can do the math to see which one is cheaper.

As we discussed the key concepts of DynamoDB in the previous post, it is now time to dive into how to apply single-table design strategy with DynamoDB. Single-table design is the most cost-effective💰 and efficient way🚀 for using DynamoDB. In this post, we will look at how to model an online library application using Single-table design. Storing multiple versions of the document is sometimes needed. The problem is if the document is big and you pay a lot of WCU for writing it.

No comments:

Post a Comment